Generative AI is being applied to accelerate content creation, summarisation and translation, and to add greater power to automation, conversational programming and cyber security. All of this is happening across a huge variety of problem domains, and the enabling technology has grown out of decades of academic and industrial research.

But with increasing adoption and commoditisation, just how accessible has AI become? Time to fire up 'hello world'...

Instil's Approach

We formed a team to start digging into this area, aiming to embrace the “Be curious and collaborate” mantra. As a developer, research and development can be fun because you get to play with the new and shiny, but it can also be pretty stressful: lots of uncertainty, followed by reading, followed by more uncertainty.

In traditional development work, we tend to focus on the problem the client is facing and build an understanding of it first, rather than jumping straight to a software solution. With R&D, however, it can be tricky to balance this approach with the need to learn, experiment, and constantly refresh and expand your skillset as a developer. We therefore invested time in documenting our learning goals, identifying the questions we needed to answer and determining whether we could frame our learning around a business need rather than learning for learning's sake.

Striking a good balance between theory and practice was another goal for the team. Making time to research new areas and dive into the details is important, but reinforcing that learning by actually building something tends to deliver longer-lasting benefits for the people involved.

Learning the Technology and Concepts

One of our first challenges was deciphering some of the terms and concepts that cropped up in our early research. Google’s Learning Pathway for Generative AI provided a good stepping stone, covering, among other things:

- Generative AI vs Discriminative AI

- Large Language Models

- Encoder/Decoder architecture

- Transformers

- Responsible AI

- Prompt tuning

- Retrieval Augmented Generation

The team went through the training materials together and then split up to create a mixture of small spikes, blogs, demos and lightning talks to share with each other and the wider Instil community. This proved quite challenging but felt like the right thing to do in the name of transparency: it let us share what we were doing while being open about the fact that we were still very much learning as we went.

Despite the detail we covered, our understanding still felt quite shallow, so we decided to build out some prototypes. Much of our early work combined AWS and GCP AI services with Jupyter Notebooks, which helped bring some of the concepts to life for the team and also fed into some product ideas.

Go Build Stuff

We decided to look at how we could leverage Generative AI to help with knowledge sharing and discovery within an organisation, using Instil itself, of course, as the test organisation.

The team began by investigating ways to improve prompt design and prompt engineering. We explored fine-tuning foundation models and approaches such as parameter-efficient tuning methods, along with zero-shot and few-shot prompting. Our initial experiments with Amazon SageMaker and Amazon Kendra gave us useful results, but we ran into some blockers around customisation and cost.
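To make the distinction between zero-shot and few-shot prompting concrete, here is a minimal Python sketch that only builds the prompt strings; sending them to a model is left out. The sentiment-classification task, the `zero_shot_prompt`/`few_shot_prompt` helpers and the example messages are hypothetical, chosen purely for illustration.

```python
from typing import Sequence, Tuple

# Hypothetical task, for illustration only.
INSTRUCTION = "Classify the sentiment of the message as positive or negative."


def zero_shot_prompt(instruction: str, query: str) -> str:
    """Zero-shot: the model sees only the instruction and the input."""
    return f"{instruction}\n\nMessage: {query}\nSentiment:"


def few_shot_prompt(
    instruction: str, examples: Sequence[Tuple[str, str]], query: str
) -> str:
    """Few-shot: a few labelled input/output pairs precede the real input."""
    shots = "\n\n".join(
        f"Message: {text}\nSentiment: {label}" for text, label in examples
    )
    return f"{instruction}\n\n{shots}\n\nMessage: {query}\nSentiment:"


if __name__ == "__main__":
    # Made-up labelled examples.
    examples = [
        ("The demo went really well!", "positive"),
        ("The build has been broken all morning.", "negative"),
    ]
    print(zero_shot_prompt(INSTRUCTION, "Loving the new lightning talks."))
    print("---")
    print(few_shot_prompt(INSTRUCTION, examples, "Loving the new lightning talks."))
```

The only difference is the handful of labelled examples placed before the real input; with few-shot prompting the model is expected to pick up both the task and the output format from those examples rather than from the instruction alone.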

These early experiments did lead us to look at Retrieval Augmented Generation (RAG) as a general pattern. With access to a long list of data sources containing everything from source code to blogs to training materials to random chat messages, the team saw it as a good fit for trying out some RAG techniques to build a virtual assistant.

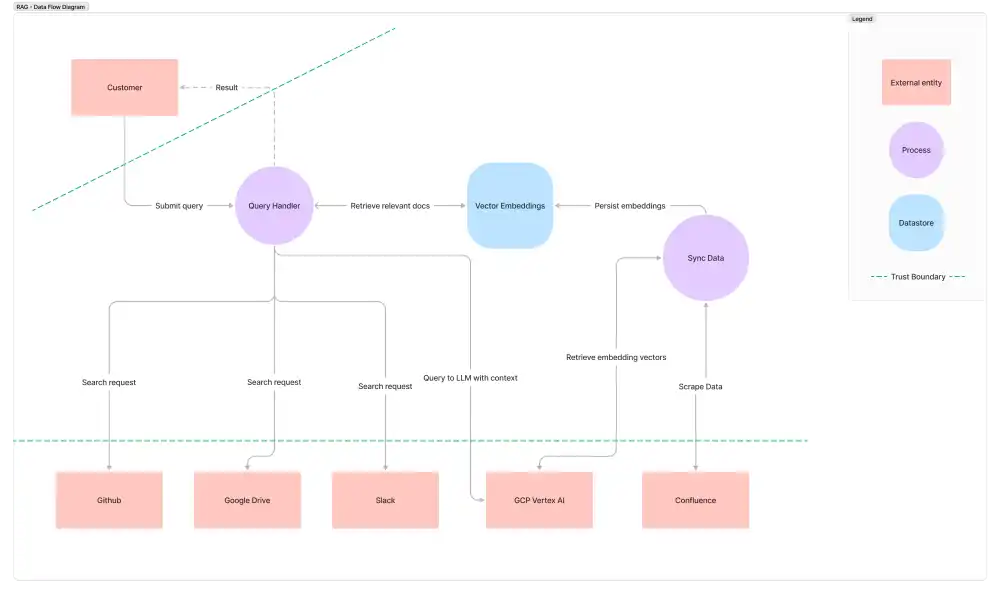

RAG would allow us to augment the prompts we send to a Large Language Model (LLM) hosted by one of the cloud providers with relevant content from our own data, making the answers more domain-specific. This would involve:

- Building up a knowledge base by scraping data from our various data sources (Confluence, Google Drive, Slack, GitHub, Bitbucket etc.)

- Converting this content into a form that is easier to use with LLMs. This process, called embedding, converts text into a numerical representation (a vector) in a vector space, where pieces of text with similar meanings sit close together

- Performing a semantic search over this knowledge base of vector embeddings, using the user's query, to find relevant items

- Sending the user's original query, plus the relevant items from our knowledge base, to the foundation LLM
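The four steps above can be sketched end to end in a few dozen lines of Python. This is a toy under loudly stated assumptions: `embed` is a hypothetical stand-in that builds a normalised bag-of-words vector, where a real system would call a learned embedding model (hosted, or an open-source model you run yourself), and the documents and prompt wording are made up. Because bag-of-words vectors only match shared words, the search here is keyword similarity rather than true semantic search; swapping in a real embedding model is what makes it semantic, while the cosine-similarity ranking and prompt assembly stay the same.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]  # sparse vector: word -> weight


def embed(text: str) -> Vector:
    """Hypothetical stand-in for an embedding model (step 2).

    Builds a unit-length bag-of-words vector; a real system would call a
    learned embedding model here instead.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are unit length, so their dot product is the cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


def build_index(docs: List[str]) -> List[Tuple[str, Vector]]:
    """Step 2: embed every document in the knowledge base once, up front."""
    return [(doc, embed(doc)) for doc in docs]


def search(index: List[Tuple[str, Vector]], query: str, k: int = 2) -> List[str]:
    """Step 3: rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, context: List[str]) -> str:
    """Step 4: combine the user's original query with the retrieved items."""
    joined = "\n".join(f"- {item}" for item in context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up knowledge base entries, standing in for scraped content (step 1).
    docs = [
        "Our Pulumi stacks deploy the Cloud Functions for the assistant.",
        "Lightning talk slides are stored in a shared drive.",
        "The STRIDE threat model covers the chat app data flow.",
    ]
    index = build_index(docs)
    question = "Which tool deploys the Cloud Functions?"
    print(build_prompt(question, search(index, question, k=1)))
```

In a real deployment the index would typically live in a vector database rather than a Python list, and the string returned by `build_prompt` is what gets sent to the foundation LLM.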

After some initial spikes, we settled on building this application on Google Cloud Platform (specifically Cloud Functions and Vertex AI), with some nice infrastructure-as-code integration via Pulumi. Because tools such as LangChain are available for interacting with models, we stuck with Python for the majority of the code.
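As a rough illustration of how a chat backend could sit in a Python Cloud Function, the sketch below keeps the request handling in a plain function so it runs without any Google Cloud dependencies. It assumes an HTTP-triggered function receives a Flask-style request (which provides `get_json(silent=True)`) and that a `(body, status)` tuple is an acceptable response; `handle_query` and the injected `answer` function (standing in for retrieval plus the model call) are hypothetical names, not part of any SDK.

```python
from typing import Any, Callable, Dict, Tuple

# Hypothetical signature for the RAG pipeline: takes a query, returns an answer.
AnswerFn = Callable[[str], str]


def handle_query(request: Any, answer: AnswerFn) -> Tuple[Dict[str, Any], int]:
    """Validate a JSON body like {"query": "..."} and delegate to `answer`.

    `request` is anything with a Flask-style get_json(silent=True) method,
    which returns None instead of raising when the body is not valid JSON.
    """
    body = request.get_json(silent=True)
    if not isinstance(body, dict):
        return {"error": "expected a JSON object"}, 400
    query = body.get("query")
    if not isinstance(query, str) or not query.strip():
        return {"error": "expected a non-empty 'query' string"}, 400
    return {"answer": answer(query.strip())}, 200


if __name__ == "__main__":
    class FakeRequest:
        """Minimal stand-in for flask.Request in local runs."""

        def __init__(self, payload: Any) -> None:
            self._payload = payload

        def get_json(self, silent: bool = False) -> Any:
            return self._payload

    print(handle_query(FakeRequest({"query": "Who owns the Pulumi stacks?"}),
                       lambda q: f"(model answer to: {q})"))
```

A deployed entry point would be a thin wrapper that calls `handle_query` with the real pipeline; injecting `answer` keeps the validation logic testable locally with a fake request object.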

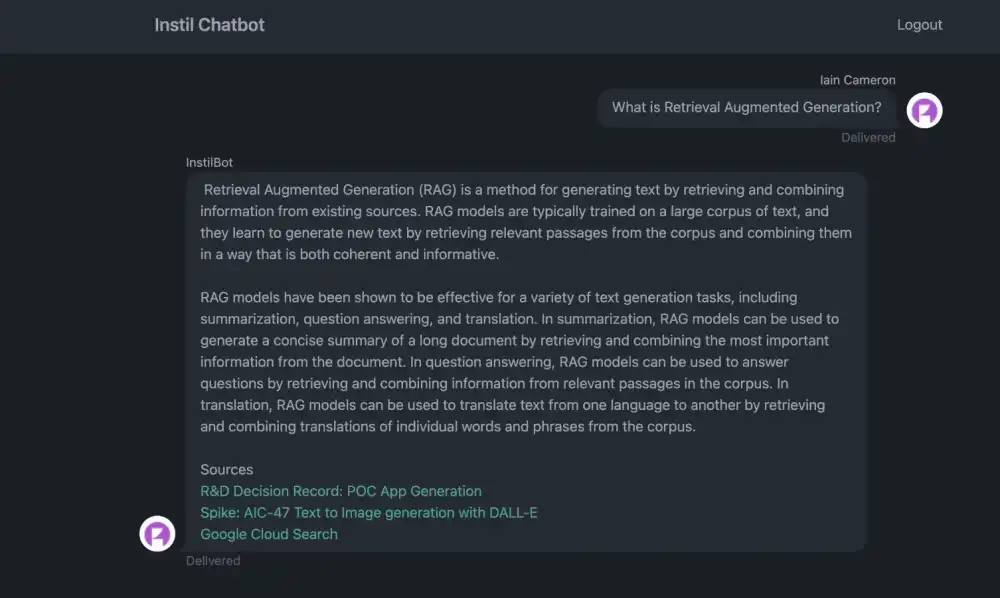

Simple chat app using Retrieval Augmented Generation

Security

One question that cropped up as we kicked off our regular product development process was: what does a threat model look like when you introduce LLMs? What attack vectors and risks are we opening up? How is data privacy affected?

Recent security incidents have highlighted that data involved in AI development is not immune to being compromised (such as the 38TB of data accidentally exposed by Microsoft AI researchers), so designing for security is important.

In addition to our usual STRIDE-based threat modelling approach, we also investigated the OWASP Top 10 for LLM Applications and documented risks and mitigations for those too.
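One way to record risks against the data flow is a simple risk register. The minimal Python sketch below pairs STRIDE categories with entries from the 2023 (v1.1) edition of the OWASP Top 10 for LLM Applications; the flows, category mappings and mitigations are illustrative assumptions, not an actual threat model. It also includes one concrete mitigation for LLM02 (Insecure Output Handling): treat model output as untrusted and HTML-escape it before rendering, since a scraped document could carry markup that ends up in an answer.

```python
import html
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    """One row of an illustrative risk register for the RAG data flow."""
    flow: str      # data flow or element from the diagram
    stride: str    # STRIDE category
    owasp: str     # OWASP Top 10 for LLM Applications entry (2023 numbering)
    mitigations: List[str] = field(default_factory=list)


# Example entries only; a real register comes out of threat modelling sessions.
REGISTER = [
    Risk("user query -> LLM", "Tampering", "LLM01: Prompt Injection",
         ["Delimit retrieved context and user input clearly in the prompt",
          "Give the model no ability to trigger actions on its own"]),
    Risk("knowledge base -> LLM", "Information Disclosure",
         "LLM06: Sensitive Information Disclosure",
         ["Only index sources that every user of the assistant may see"]),
    Risk("LLM response -> chat UI", "Tampering", "LLM02: Insecure Output Handling",
         ["Treat model output as untrusted and encode it before rendering"]),
]


def render_answer(model_output: str) -> str:
    """Mitigation for LLM02: HTML-escape the answer so any markup in it is
    displayed as text rather than interpreted by the browser."""
    return f"<p>{html.escape(model_output)}</p>"


if __name__ == "__main__":
    for risk in REGISTER:
        print(f"{risk.owasp} [{risk.stride}] on {risk.flow}: "
              f"{'; '.join(risk.mitigations)}")
    print(render_answer('<img src=x onerror="alert(1)">'))
```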

Data Flow Diagram for Retrieval Augmented Generation pattern

The question of data ownership is an interesting one. Several well-publicised shifts in policy from OpenAI/ChatGPT show the areas that companies are concerned about, and also how LLM providers are having to adapt to build customer confidence. Being able to opt out of having private data used to train public or proprietary models is a positive step, but given how immature these offerings still are, it is worth doing your own research here.

Taking it a step further, there are options for hosting your own LLMs, but as ever there is a trade-off to be made. It is not dissimilar to the serverless vs containers argument, but with the added dimension of how your data is used to train models.

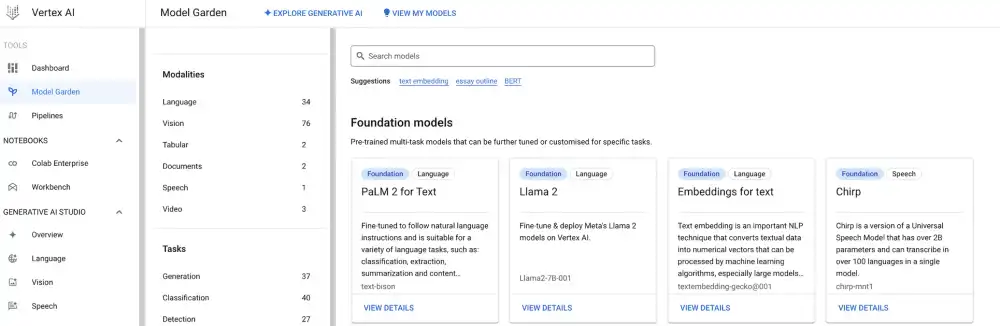

Managed Services

So, what is the current landscape for managed AI services? We built our internal application using GCP Vertex AI (alongside some open-source models) and found the experience to be pretty positive. Initially we hit some rate-limiting issues when using the hosted models, but we worked around these by hosting our own open-source embedding model. Scalability and cost are always factors when comparing cloud providers, and AI services are no different, so deciphering cost calculators is as important as ever.
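We sidestepped the rate limits by self-hosting an embedding model, but a common way to cope with rate limits when calling hosted models (and not what we describe above) is to retry throttled requests with exponential backoff and jitter. The Python sketch below shows that pattern; `RateLimitError` is a hypothetical placeholder for whichever quota or throttling exception your client library actually raises, and the delay values are arbitrary defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical placeholder for your client library's throttling error."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call`, retrying on RateLimitError with exponential backoff.

    The n-th retry waits a random time in [0, min(max_delay, base_delay * 2**(n-1))]
    ("full jitter"), so many clients hitting the same limit do not retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return call()
        except RateLimitError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of retries: let the caller see the original error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
```

A call site might look like `with_backoff(lambda: embed_batch(texts))`, where `embed_batch` is a hypothetical call to a hosted embedding endpoint. The `sleep` parameter is injectable so the retry logic can be tested without actually waiting.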

GCP Vertex AI Models

The size and quality of the LLMs on offer will also be a factor in the arms race between cloud providers. The partnership announced between Hugging Face and AWS looks to be a positive step forward in promoting open collaboration on training models.

In addition, the launch of Amazon Bedrock and Agents for Amazon Bedrock looks like another leap forward in making Generative AI capabilities available to organisations. Making Retrieval Augmented Generation easier will open up more use cases for companies to take advantage of.

Summary

The rise in availability of managed AI services is a definite springboard for development teams, but they will still need to invest time in learning the underlying foundations of AI and develop an understanding of the kinds of problem it is suited for (and the kinds of problem it isn't). AI is not a hammer for every problem.

When developing AI solutions, teams must still apply the same engineering rigour as they would on any project in terms of security, scalability, maintainability and so on. Finally, they should be aware of the multiplying effect of any biases present in the underlying training data: building on poor models can have far-reaching ethical consequences.

It was fun to build something as a team to support research and learning. Leveraging these managed/serverless capabilities definitely let us build faster - at the prototype stage anyway.

Reducing obstacles is always welcome, and ideally this will help teams build experience more quickly and, ultimately, reduce the time to business value for Generative AI applications.